One of the most promising applications of generative AI in today’s enterprise landscape is automating business processes. These workflows often involve nuanced decisions and inconsistent or unstructured data, making them difficult to automate with traditional logic-based systems.

In this post, we’ll explore how to build a .NET agent using Semantic Kernel and Azure AI Foundry to process employee expense reports based on natural-language policies. This is a practical, hands-on example of how large language models (LLMs) can be embedded into real business applications. The accompanying code is available on GitHub.

Most organizations require employees to submit expense reports for reimbursement. These reports typically include:

A human reviewer must then interpret the company’s expense policy to determine whether each report should be approved, denied, or escalated.

Policies often contain natural-language rules like:

While these rules are easy for humans to understand, they’re tricky to encode in software.

Legacy systems rely on structured input fields – dates, dropdowns, number boxes, etc. – to extract and validate data. These approaches struggle when inputs vary or include ambiguity. For example:

Maintaining and scaling these brittle rulesets is time-consuming and error-prone. What’s needed is a system that understands context and intent, not just structure.

By combining Semantic Kernel with Azure-hosted language models, we can build a .NET agent that reads expense data, applies policy logic, and returns a clear recommendation – all using natural language.

Benefits of this approach:

We’ll start by defining a simple data structure to hold the agent’s recommendation.

    public class ExpenseReportRecommendation
    {
        public string EmployeeName { get; set; } = string.Empty;
        public DateTime ReportDate { get; set; }
        public decimal AmountReported { get; set; }
        public decimal ReceiptsTotal { get; set; }
        public string Recommendation { get; set; } = string.Empty;
        public string Summary { get; set; } = string.Empty;
    }

The agent will use this class to report its findings – whether to approve, deny, or refer a report to a manager.
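To make the shape concrete, here is a minimal sketch of a JSON payload mapping onto this class with `System.Text.Json`. The sample values are hypothetical and chosen only for illustration; they are not real agent output. The class is repeated at the bottom so the snippet compiles on its own.

```csharp
using System;
using System.Text.Json;

// Hypothetical sample payload; the values are illustrative, not real agent output.
const string sampleJson = """
{
  "EmployeeName": "Alex",
  "ReportDate": "2025-01-15T00:00:00",
  "AmountReported": 120.50,
  "ReceiptsTotal": 120.50,
  "Recommendation": "Approve",
  "Summary": "Reported amount matches the receipts."
}
""";

// Default options are case-sensitive, so the property names must match the class exactly.
var recommendation = JsonSerializer.Deserialize<ExpenseReportRecommendation>(sampleJson);

Console.WriteLine($"{recommendation?.EmployeeName}: {recommendation?.Recommendation}"); // Alex: Approve

// Same shape as the class in the post, repeated so the sketch is self-contained.
public class ExpenseReportRecommendation
{
    public string EmployeeName { get; set; } = string.Empty;
    public DateTime ReportDate { get; set; }
    public decimal AmountReported { get; set; }
    public decimal ReceiptsTotal { get; set; }
    public string Recommendation { get; set; } = string.Empty;
    public string Summary { get; set; } = string.Empty;
}
```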

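Our agent will need access to two main tools: one that retrieves an employee’s expense report and one that loads the organization’s expense policy.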

Here’s the function to retrieve the report:

    [KernelFunction(nameof(GetExpenseReport))]
    [Description("Gets the expense report for the specified employee.")]
    public JsonDocument? GetExpenseReport(string employeeName)
    {
        // Reports are stored as one JSON file per employee under the Data folder.
        var path = Path.Combine(AppContext.BaseDirectory, "Data", $"{employeeName}.json");
        if (!File.Exists(path))
        {
            return null;
        }

        var jsonContent = File.ReadAllText(path);
        var report = JsonDocument.Parse(jsonContent);
        return report;
    }

And here’s the function to load the policy:

    [KernelFunction(nameof(GetExpensePolicyAsync))]
    [Description("Gets the travel expense policy for the organization.")]
    public async Task<string> GetExpensePolicyAsync()
    {
        // The policy is a plain-text file shipped alongside the application.
        var fullPath = Path.Combine(AppContext.BaseDirectory, PolicyPath);
        var policy = await File.ReadAllTextAsync(fullPath);
        return policy.Trim();
    }

In a production environment, these could connect to a database, SharePoint site, or cloud storage.
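Because the `employeeName` argument is supplied by the model, it may be worth validating it before it becomes part of a file path. The following is a minimal sketch of one way to do that; `ResolveReportPath` is a hypothetical helper and is not part of the original sample.

```csharp
using System;
using System.IO;

// Hypothetical helper: builds the report path and rejects names that could
// point outside the Data folder (for example "../secrets").
static string? ResolveReportPath(string baseDirectory, string employeeName)
{
    if (string.IsNullOrWhiteSpace(employeeName))
    {
        return null;
    }

    // Path.GetFileName strips any directory part, so a mismatch means
    // the name contained a directory separator.
    if (Path.GetFileName(employeeName) != employeeName)
    {
        return null;
    }

    if (employeeName.IndexOfAny(Path.GetInvalidFileNameChars()) >= 0)
    {
        return null;
    }

    return Path.Combine(baseDirectory, "Data", $"{employeeName}.json");
}

Console.WriteLine(ResolveReportPath(AppContext.BaseDirectory, "Alex") is not null);       // True
Console.WriteLine(ResolveReportPath(AppContext.BaseDirectory, "../secrets") is not null); // False
```

A tool function could call a helper like this first and return `null` when the name is rejected, which matches how the sample already handles a missing file.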

Now we create a ChatCompletionAgent, wiring in the tools and specifying how it should interpret the data. This includes system instructions and the output format.

    var kernel = Kernel.CreateBuilder()
        .AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey)
        .Build();

    // Register the two tool functions so the model can call them.
    kernel.Plugins.AddFromType<ExpenseReportTools>(nameof(ExpenseReportTools));

    _chatCompletionAgent = new ChatCompletionAgent
    {
        Name = "Expense-Agent",
        Description = "Agent for expense report processing.",
        Instructions = $"""
            You are an expense report processing agent.
            Apply the organization's expense policy to recommend if expense reports should be approved, denied, or referred to a manager.
            'Approve' means the total amount matches the receipts and is within policy limits and rules.
            'Deny' means the total amount does not match the receipts, exceeds policy limits, or violates rules.
            'Refer' means the expense report requires further review by a manager.
            Return json with the schema:
            {JsonSerializerOptions.Default.GetJsonSchemaAsNode(typeof(ExpenseReportRecommendation))}
            """,
        Kernel = kernel,
        Arguments = new KernelArguments(
            new OpenAIPromptExecutionSettings
            {
                FunctionChoiceBehavior = FunctionChoiceBehavior.Required()
            })
    };
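To see what the schema interpolated into the instructions looks like, the sketch below prints it on its own. It assumes .NET 9 or later, where `GetJsonSchemaAsNode` is available as an extension method in `System.Text.Json.Schema`. The class is repeated so the snippet compiles by itself, and the exact schema text may vary between runtime versions.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Schema;

// Generate the JSON schema for the recommendation type (requires .NET 9+).
var schema = JsonSerializerOptions.Default.GetJsonSchemaAsNode(typeof(ExpenseReportRecommendation));

// Pretty-print the schema so the properties the model is asked to fill in are easy to read.
Console.WriteLine(schema.ToJsonString(new JsonSerializerOptions { WriteIndented = true }));

// Same shape as the class in the post, repeated so the sketch is self-contained.
public class ExpenseReportRecommendation
{
    public string EmployeeName { get; set; } = string.Empty;
    public DateTime ReportDate { get; set; }
    public decimal AmountReported { get; set; }
    public decimal ReceiptsTotal { get; set; }
    public string Recommendation { get; set; } = string.Empty;
    public string Summary { get; set; } = string.Empty;
}
```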

We also define a method to invoke the agent and parse the result:

    public async Task<ExpenseReportRecommendation?> ProcessExpenseReportAsync(string employeeName)
    {
        var chatMessage = new ChatMessageContent(AuthorRole.User, $"Process the expense report: {employeeName}.");

        await foreach (ChatMessageContent chatMessageContent in _chatCompletionAgent.InvokeAsync(chatMessage))
        {
            var response = chatMessageContent.Content ?? string.Empty;

            // Skip any message that is not the JSON recommendation.
            if (!response.StartsWith("{"))
            {
                continue;
            }

            var expensesReportDecision = JsonSerializer.Deserialize<ExpenseReportRecommendation>(response);
            return expensesReportDecision;
        }

        throw new InvalidOperationException("Failed to process expense report");
    }

This method sends a request to the agent and parses the returned JSON into our predefined class.
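Models sometimes wrap JSON in a Markdown code fence or add a sentence before it, in which case a check for a leading `{` would skip the reply. The following is a minimal sketch of a more tolerant extraction step. `ExtractJsonObject` is a hypothetical helper, not part of the original sample, and the reply string is made up for illustration.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper: returns the text between the first '{' and the last '}',
// or null when the reply contains no JSON object.
static string? ExtractJsonObject(string response)
{
    var start = response.IndexOf('{');
    var end = response.LastIndexOf('}');
    return start >= 0 && end > start ? response[start..(end + 1)] : null;
}

// Simulated reply wrapped in a Markdown code fence (illustrative only).
var fence = new string('`', 3);
var reply = $"{fence}json\n{{\"Recommendation\":\"Refer\"}}\n{fence}";

var json = ExtractJsonObject(reply);
using var doc = JsonDocument.Parse(json!);
Console.WriteLine(doc.RootElement.GetProperty("Recommendation").GetString()); // Refer
```

This simple approach assumes the reply contains a single JSON object. It would not cope with several objects in one message.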

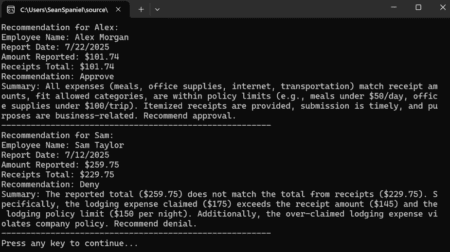

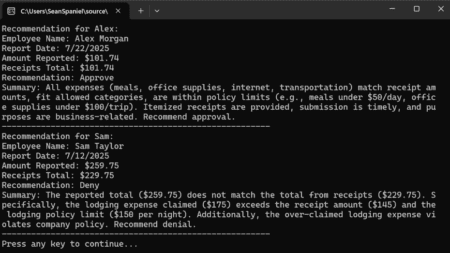

Let’s try it out by evaluating reports for two employees:

    var expenseAgent = new Agent(deploymentName, endpoint, apiKey);
    var employees = new[] { "Alex", "Sam" };

    foreach (var employee in employees)
    {
        var recommendation = await expenseAgent.ProcessExpenseReportAsync(employee);
        ArgumentNullException.ThrowIfNull(recommendation, nameof(ExpenseReportRecommendation));

        Console.WriteLine($"Recommendation for {employee}:");
        Console.WriteLine($"Employee Name: {recommendation.EmployeeName}");
        Console.WriteLine($"Report Date: {recommendation.ReportDate.ToShortDateString()}");
        Console.WriteLine($"Amount Reported: {recommendation.AmountReported:C}");
        Console.WriteLine($"Receipts Total: {recommendation.ReceiptsTotal:C}");
        Console.WriteLine($"Recommendation: {recommendation.Recommendation}");
        Console.WriteLine($"Summary: {recommendation.Summary}");
        Console.WriteLine("-------------------------------------------------------");
    }

    Console.WriteLine("Press any key to continue...");
    Console.ReadKey();

In this example:

Try modifying the policy to allow small discrepancies or relax other rules – and see how the agent adapts its reasoning accordingly.
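Since the recommendation comes from a language model, a small deterministic cross-check can catch obvious contradictions before anyone acts on it. The sketch below is one possible guardrail, not part of the original sample. `IsConsistent` and its `tolerance` parameter are hypothetical, and the tolerance should mirror whatever discrepancy your policy actually allows.

```csharp
using System;

// Hypothetical guardrail: an "Approve" is only treated as consistent when the reported
// amount is within `tolerance` of the receipts total. Other recommendations pass through.
static bool IsConsistent(string recommendation, decimal amountReported, decimal receiptsTotal, decimal tolerance = 0m)
{
    if (!string.Equals(recommendation, "Approve", StringComparison.OrdinalIgnoreCase))
    {
        return true;
    }

    return Math.Abs(amountReported - receiptsTotal) <= tolerance;
}

Console.WriteLine(IsConsistent("Approve", 120.50m, 120.50m));        // True
Console.WriteLine(IsConsistent("Approve", 125.00m, 120.50m));        // False
Console.WriteLine(IsConsistent("Approve", 125.00m, 120.50m, 5.00m)); // True
Console.WriteLine(IsConsistent("Deny", 125.00m, 120.50m));           // True
```

A report that fails the check could be referred to a manager rather than approved automatically.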

This isn’t just automation – it’s decision automation.

The agent:

It shows how large language models can act as reasoning engines for enterprise workflows, delivering decisions that are both scalable and accurate – all within your .NET environment.